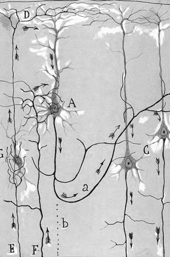

Most neurocomputational models are not hard-wired to perform a task. Instead, they are typically equipped with some kind of learning process. In this post, I'll introduce some notions of how neural networks can learn. Understanding learning processes is important for cognitive neuroscience because they may underlie the development of cognitive ability.

Let's begin with a theoretical question that is of general interest to cognition: how can a neural system learn sequences, such as the actions required to reach a goal?

Consider a neuromodeler who hypothesizes that a particular kind of neural network can learn sequences. He might start his modeling study by "training" the network on a sequence. To do this, he stimulates (activates) some of its neurons in a particular order, with each neuron representing an object on the way to the goal.

After the network has been trained through multiple exposures to the sequence, the modeler can test his hypothesis by stimulating only the neurons at the beginning of the sequence and observing whether the neurons for the rest of the sequence activate in the correct order, completing the sequence.
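The train-then-test protocol can be sketched in a few lines of Python. This is a minimal illustration under assumptions not taken from the post: each item in the sequence is one binary (0/1) neuron, training simply strengthens the connection from each stimulated neuron to the one stimulated next, and a neuron fires when its summed input reaches a fixed threshold. The names `make_weights`, `train`, `recall`, `rate`, and `threshold` are hypothetical.

```python
# Minimal sketch (assumed setup, not the author's model): one binary neuron
# per sequence item; weights[post][pre] is the connection from pre to post.

def make_weights(n):
    """Return an n x n matrix of zero connection strengths."""
    return [[0.0] * n for _ in range(n)]


def train(weights, sequence, n_exposures=3, rate=0.5):
    """Present the sequence repeatedly by stimulating its neurons in order.

    Assumed rule: each time neuron `pre` is followed by neuron `post`,
    the connection from pre to post grows by `rate`.
    """
    for _ in range(n_exposures):
        for pre, post in zip(sequence, sequence[1:]):
            weights[post][pre] += rate
    return weights


def recall(weights, cue, n_steps, threshold=1.0):
    """Stimulate only the cue neuron, then let activity propagate.

    At each step every neuron is updated at once: it becomes active (1) if
    its summed weighted input reaches `threshold`, otherwise inactive (0).
    Returns the indices of active neurons in the order they fired.
    """
    n = len(weights)
    state = [0] * n
    state[cue] = 1
    fired = [cue]
    for _ in range(n_steps):
        state = [
            1 if sum(weights[i][j] * state[j] for j in range(n)) >= threshold else 0
            for i in range(n)
        ]
        active = [i for i, s in enumerate(state) if s]
        if not active:  # activity died out; stop early
            break
        fired.extend(active)
    return fired


if __name__ == "__main__":
    well_trained = train(make_weights(5), [0, 1, 2, 3, 4], n_exposures=3)
    print(recall(well_trained, cue=0, n_steps=4))  # [0, 1, 2, 3, 4]

    under_trained = train(make_weights(5), [0, 1, 2, 3, 4], n_exposures=1)
    print(recall(under_trained, cue=0, n_steps=4))  # [0]
```

With these assumed numbers, three exposures push each forward connection to 1.5, above the threshold of 1.0, so cueing the first neuron replays the whole sequence. A single exposure leaves the connections at 0.5, and activity stops at the cue.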

Successful learning in any neural network depends on how the connections between the neurons are allowed to change in response to activity. Most researchers call this manner of change "a learning rule". However, we will call it a "synaptic modification rule" because, although the network learned the sequence, it is not clear that the *connections* between the neurons in the network "learned" anything in particular.

The particular synaptic modification rule selected is an important ingredient in neuromodeling because it may constrain the kinds of information the neural network can learn.

There are many categories of mathematical synaptic modification rules used to describe how synaptic strengths should change in a neural network. Some of these categories include backpropagation of error, correlative Hebbian, and temporally-asymmetric Hebbian.

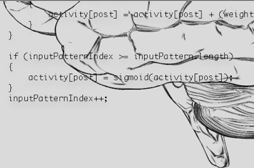

- Backpropagation of error states that connection strengths throughout the entire network should change so as to minimize the difference between the actual activity and the "desired" activity at the "output" layer of the network.

- Correlative Hebbian states that any two interconnected neurons that are active at the same time should strengthen their connections, so that if one of the neurons is activated again in the future the other is more likely to become activated too.

- Temporally-asymmetric Hebbian is described in more detail in the example below, but it essentially emphasizes the importance of causality: if a neuron reliably fires before another, its connection to the other neuron should be strengthened. If it instead fires after the other neuron, the connection should be weakened.
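To make the three categories concrete, here is one simple instance of each, written as a weight-update function. These are illustrative assumptions, not the only forms in use. For backpropagation of error, the sketch shows only the special case of a single linear output unit with no hidden layers, where backpropagation reduces to the delta rule. The temporally-asymmetric rule uses an exponential timing window, and its parameters `a_plus`, `a_minus`, and `tau` are hypothetical values chosen for illustration.

```python
import math


def delta_rule_update(weights, inputs, target, rate=0.5):
    """Error-driven update for one linear output unit (no hidden layers).

    The unit's output is the weighted sum of its inputs; each weight moves
    in proportion to (target - output) times its input, which reduces the
    squared output error. Full backpropagation extends this to hidden layers.
    """
    output = sum(w * x for w, x in zip(weights, inputs))
    error = target - output
    return [w + rate * error * x for w, x in zip(weights, inputs)]


def hebbian_update(weight, pre, post, rate=0.1):
    """Correlative Hebbian rule: strengthen only when both neurons are active."""
    return weight + rate * pre * post


def asymmetric_hebbian_update(weight, t_pre, t_post,
                              a_plus=0.1, a_minus=0.12, tau=20.0):
    """Temporally-asymmetric Hebbian rule with an exponential timing window.

    Pre fires before post (t_post > t_pre): strengthen.
    Pre fires after post (t_post < t_pre): weaken.
    The change shrinks as the two spikes get further apart in time.
    """
    dt = t_post - t_pre
    if dt > 0:
        return weight + a_plus * math.exp(-dt / tau)
    if dt < 0:
        return weight - a_minus * math.exp(dt / tau)
    return weight  # simultaneous spikes: no change in this sketch
```

The contrast between the last two functions is the point of the list: the correlative rule only asks whether both neurons were active, while the asymmetric rule also asks which one fired first. That ordering information is what makes the asymmetric rule a natural candidate for sequence learning.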

Why are there so many different rules? Some synaptic modification rules are chosen because they are mathematically convenient. Others are chosen because they are close to currently known biological reality. Most informative neuromodeling lies somewhere in between.

An Example

Let's look at an example of a learning rule used in a neural network model that I have worked with. Imagine you have a network of interconnected neurons that can be either active or inactive. If a neuron is active, its value is 1; otherwise, its value is 0. (Using 1 and 0 to represent simulated neuronal activity is only one of many possible approaches; this one goes by the name "McCulloch-Pitts".)
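A binary unit of this kind is easy to write down. The sketch below uses a common simplified form of the McCulloch-Pitts neuron: the unit outputs 1 when the weighted sum of its binary inputs reaches a threshold and 0 otherwise. The weights and thresholds are illustrative assumptions, not values from the model described in this post, and `network_step` is a hypothetical helper that updates every neuron at once.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Binary threshold unit: 1 if the weighted input sum reaches threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def network_step(state, weights, thresholds):
    """Update every neuron synchronously from the current 0/1 state.

    weights[i][j] is the connection from neuron j to neuron i.
    """
    return [mcculloch_pitts(state, weights[i], thresholds[i])
            for i in range(len(state))]


if __name__ == "__main__":
    # With two inputs of weight 1, the threshold alone decides the logic:
    # threshold 2 behaves like AND, threshold 1 like OR.
    for a in (0, 1):
        for b in (0, 1):
            print(a, b,
                  mcculloch_pitts([a, b], [1, 1], threshold=2),
                  mcculloch_pitts([a, b], [1, 1], threshold=1))

    # A three-neuron chain (0 -> 1 -> 2): activity hops one neuron per step.
    chain = [[0, 0, 0], [1, 0, 0], [0, 1, 0]]
    state = [1, 0, 0]
    for _ in range(2):
        state = network_step(state, chain, [1, 1, 1])
        print(state)
```

Because every value in the network is 0 or 1, a learning rule for such a model only has to decide how the connection weights change when particular neurons are, or are not, active together or in sequence.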
